SSIS, Dataflows, ADF (Azure Data Factory)

SSIS

SSIS is commonly known as an "ETL" product, a means to move and transform data from one point to another from various data sources (SQL Server or Oracle databases, Excel, CSV or Text files and more).

SSIS has four important components:

- Control Flow – It is the brain of the SSIS package. It helps to arrange the order of all the components like containers, tasks etc.

- Data Flow – If Control Flow is the brain, Data Flow is the heart of the SSIS Package. It allows you to extract, transform and then load data into another destination.

- Parameters – These are special types of variables. They help to ease the process of passing run-time values to SSIS packages.

- Packages – Packages are the collection of Control and Data Flow. The containers and data flow tasks in control flow and the sources and destinations in the Data flow, altogether are known as a package. In a package, you can have multiple Control Flow and Data Flow Tasks.

Dataflows

Dataflows is a self-service data prep tool for importing a wide variety of data into the Power Platform delivered as SaaS. With low or little knowledge of data transformation, you would be able to build a dataflow solution. There is a simple graphical interface for doing the transformations, scheduling refreshes, and other parts that are all designed very simply.

Dataflow includes the data transformation engine (Powered by Power Query), and the storage (Powered by Azure Data Lake Storage Gen2, or Microsoft Dataverse).

Power Query is a technology that allows you to connect to various data sources and transform data using Excel/Power BI Desktop/Dataflow. For more info, see Power Query - Overview and Learning article.

In Power Query,

- You can use the visual editor, interactive components, and ribbons to carry out over 350 data transformations like splitting, grouping, or removing duplicates.

- You could write out your transformation in Power Query’s native language: the M language.

- You can also merge and combine data tables, or queries, as Power Query calls them.

- You can write Python or R scripts.

- You can carry out some AL insights like text analysis on your data using Azure Machine Learning and Cognitive Services.

Dataflows is included with a Power BI Pro license, with certain features available only with Power BI Premium capacity.

Dataflow has applications ranging from Data Integration to Data validation. following are some of the important applications:

- Data Preparation / ETL : Data preparation involves gathering, combining, structuring, and organizing data, so it can be consumed in business intelligence, data visualization, and analytics applications. Power Query, the powerful tool from Microsoft is capable of preprocessing, profiling, cleansing, and transforming. It seamlessly pulls the data from internal systems and external sources with required security and authentication.

- Data Migration : Many businesses are augmenting or migrating their legacy applications to PowerApps due to its known potential. The data size of such applications is large in many cases. The extracted data needs to go through a series of functions in preparation and ultimately load into Dataverse. The Dataflow does this job seamlessly by mapping columns of the destination Dataverse table.

- Data Validation / Data Reconciliation: Data validation is a critical step in any data workflow, it’s often skipped over as it slows down the pace of work. Moreover, it is not straight forward process as there are many interdependent tables that exist in the database with a parent-child relationship. Dataflow brings the capability to organize data into comparable formats thereby identifying errors and inconsistencies with defined rules.

No doubt, the potential of Dataflows is invariably the best-kept secret in the Power Platform. However, there are always downsides to every great tool. Following are a few Pros and Cons of Dataflow, one has to consider while implementing.

Pros :

- Dataflow in Power Platform does not need an additional license

- The tool is friendly and intuitive, any citizen developer can easily use

- The Power queries in Dataflow, Excel, and Power BI are the same. They are simple to transfer from one platform to another

- Dataflow can be a part of the Power Platform Solution, hence it is easy to migrate it to different environments along with other components

- It can handle a massive amount of data, unlike Power Automate which throttles if a number of API calls per minute or day cross the threshold limit.

- It is easy to refresh Dataflow either manually or with the schedule. Incremental refresh gives an edge

- Dataflow executes in the cloud and an execution report is available for each run hence there is no need to monitor runs, unlike Excel Power Query

- The tool handles a great level of parallelism and hence it takes a considerably short time compared to Power Automate or other integration tools

- There are many text operations available in Power Query which makes users parse the data easily

- Can load the data from Cloud, Saas, On-premises data sources, and even personal computers (with authentication)

Cons :

- Microsoft has not yet enabled sharing ownership of the dataflow

- Environment variables cannot be used in Dataflows

- Users can’t add files or attachments to Dataverse while loading the data

- There is no guidance on the optimal number of entities in the dataflow

- Dataflow has a refresh limit of two hours per entity

Azure Data Factory

Azure Data Factory is a cloud based ETL service for data integration and data migrations. It enables users to develop data-driven processes to orchestrate data movement and transformations. Azure Data Factory helps you to create and schedule pipelines that can get data from several different data sources. It is also used to give meaning to big data stored in a storage system by helping in data movement and performing transformations on big-scale data.

ADF has four key components:

- Pipeline – Contains the tasks you want to execute. It defines the complete workflow, like what tasks should be performed and in which order.

- Activity – These are the individual steps inside a pipeline, where each activity performs a single task. They can either be chained or run in a parallel manner. They control the flow inside a pipeline.

- Datasets – Collected data is required as an input for ETL process. These are views that represent databases, files, or folders.

- Linked Services – These are basically the connection strings used to connect data sources and services and to authenticate them.

Reference: Getting Started with Azure Data Factory

|

Pros |

Cons |

|

|

SSIS |

·

Able to handle data from a variety

of data sources. ·

Provides transformation

functionality ·

C# or VBA can be used to extend its

functionality ·

Easy to learn and user-friendly ·

Debugging capabilities are great,

particularly during flow execution. |

·

Package execution report can only

be seen through Management Studio. ·

Running multiple packages in

parallel is difficult. Slow Evolution ·

Working with unstructured datasets

might be difficult. |

|

Azure Data Factory |

·

Provides a server less solution

eliminating the mundane tasks of maintenance and update of software. ·

Supports integration with multiple

3rd party connectors. ·

Supports long and time-consuming

queries. ·

Highly scalable and cost effective ·

Creating pipelines schedules is

much easier |

·

Less native transform functions as

compared to SSIS. ·

Does not have intelligence or

debugging tools ·

Needs to maintain perfect billing

strategy or else will lead to excessive cost. ·

Lack of flexibility as compared to

other ETL tools, e.g., C# script component. |

SSIS can be very fast, if designed correctly. Since most transformations (such as data conversion, adding new columns using expressions, splitting the data based on conditions etc.) are done on data in memory buffers, it goes very fast. However, some transformations like sorting need to read all the data in memory first before they start to output any rows. This means that an SSIS package can grind to a stop if you're not careful. You can find some performance best practices in the following tips:

- Improve SSIS data flow buffer performance

- Semi-blocking Transformations in SQL Server Integration Services

If your data flow has no blocking transformations, performance is typically defined by either the read speed of the source or the write speed of the destination. If you use SSIS as an ELT tool, meaning most transformations are not done in SSIS itself but rather in a database engine for example, SSIS doesn't have much impact. SSIS can then be run on a lightweight server. The number of CPU cores will determine how much tasks SSIS can run in parallel:

#tasks in parallel = #cores + 2

SSIS is a great orchestrator, since it allows you to run SQL statements in parallel, which is not possible in a stored procedure or in a SQL Server Agent job.

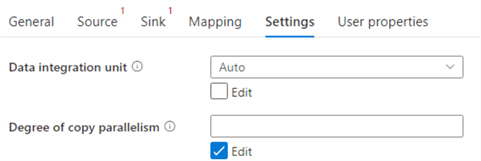

ADF is a cloud tool and thus benefits from the elastic nature of the cloud. When using the Copy Activity, ADF will take care of scale and parallelism automatically when using the default settings:

Data flows in ADF use Apache Spark behind the scenes and it has some optimization features such as partitioning. If a data flow starts up for the first time, the Spark cluster needs to be started as well which brings some additional overhead. If you have long running jobs this overhead is negligible, but for small datasets it's possible the cluster start-up time is much longer than the actual runtime of your data flow. This is why ADF data flows are more suited for bigger data sets while SSIS is better suited for small to medium data sets.

It's clear that in some use cases it's straight forward to choose which tool to use. If your project is going to be fully in the cloud, ADF seems to be the obvious choice. If everything is on-premises, SSIS seems a no-brainer. However, there are some interesting use cases where we could choose for a hybrid approach.

SSIS in the Cloud

You can run SSIS packages inside ADF by using the Azure-SSIS Integration Runtime. This is a cluster of virtual machines, managed by ADF, where you can execute SSIS packages. You pay for each second the cluster is running. The SSIS project itself is stored in an SSIS catalog in either Azure SQL DB or in Azure SQL Managed Instance.

"You've captured the essence of the topic perfectly. Great job!"

ReplyDeleteClinical Research Courses in Banglore

Clinical Research Courses in Nagpur

Clinical Research Courses in Amravati

Excellent post! Learned something new today.

ReplyDeleteMedical Coding Courses in Banglore

Medical Coding Courses in Pune

Medical Coding Courses in Nagpur

Medical Coding Courses in Amaravati

"This gave me a fresh take on the topic. Appreciate the value!"

ReplyDeletePharmacovigilance Courses in Mumbai

Eligibility for Digital Marketing Courses

Career in Pharmacovigilance